Schnappi, naturally the methodology for identifying (and eventually remediating) bad ticks and data holes is itself an evolving process. I started out with the standard approach of externally defined filters (if the price delta > X, then we have a bad tick, etc.). The problem with that is that you force the dataset to conform to your perceptions and expectations of what the price data ought to look like. Those may or may not represent the intrinsic statistical characteristics of the financial instrument's price evolution.

Eventually my method evolved to the point where I simply require the data to be self-consistent with the intrinsic characteristics of the financial instrument itself (a currency pair in this discussion, but it applies across the board). In practice, this means I take a stretch of "known good market data" and robustly characterize its attributes: time gaps, price gaps, etc. This generates a profile of the natural, characteristic price evolution for the currency pair. The profile will be broker-specific, as each broker has its own subtle "pump and pulse" algorithms to keep the price feed "alive".
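Here is a minimal sketch of the time-gap half of such a profile. It assumes the M1 bar open times are already loaded as Python `datetime` objects. The function name `time_gap_profile` is hypothetical and not taken from the attached script. The sketch counts how often each spacing between successive bar opens occurs:

```python
from collections import Counter
from datetime import datetime


def time_gap_profile(open_times: list[datetime]) -> Counter:
    """Count how often each spacing (in whole minutes) between successive M1 bar opens occurs.

    A spacing of 1 is a normal, contiguous M1 sequence; anything larger is a time gap
    (weekend, holiday, or missing data).
    """
    profile: Counter = Counter()
    for prev, cur in zip(open_times, open_times[1:]):
        minutes = int((cur - prev).total_seconds() // 60)
        profile[minutes] += 1
    return profile


t0 = datetime(2009, 6, 1, 0, 0)
times = [t0.replace(minute=m) for m in (0, 1, 2, 5, 6)]
print(time_gap_profile(times))  # Counter({1: 3, 3: 1})
```

Running this over a known-good stretch shows which time gaps are normal for the broker's feed. Sessions, weekends, and quiet hours all leave their own signature.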

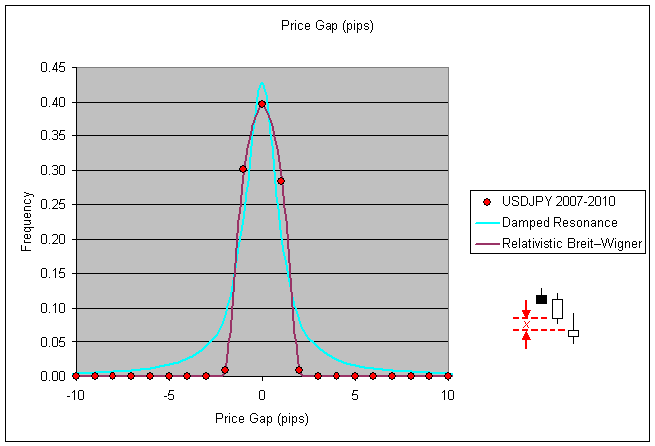

Armed with this characterization data, I then have the script iterate through the older, more questionable time periods. It assesses each bar on whether it is statistically consistent with the inherent nature of the currency pair. For example, let's say we observe that the price gap between successive M1 candles for USDJPY is generally < 3 pips. That is to say, the opening bid for candle N+1 is generally anywhere from -3 to +3 pips away from the closing bid for candle N.

The statistics for this metric are easy to generate: just compute open(i+1) - close(i) for your data and build a histogram. (Note that for this phase of the characterization I intentionally exclude price gaps from candle pairs that themselves have a time gap between them.)
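Here is a sketch of that histogram, assuming bid-price M1 bars and a USDJPY pip of 0.01. The `Bar` type and `gap_histogram` name are illustrative, not from the original script. Pairs whose open times are not exactly one bar apart are skipped, which matches the exclusion described above:

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Bar:
    time: datetime  # bar open time
    open: float     # bid prices
    high: float
    low: float
    close: float


def gap_histogram(bars: list[Bar], pip: float = 0.01,
                  bar_len: timedelta = timedelta(minutes=1)) -> Counter:
    """Histogram of open(i+1) - close(i), in whole pips, for contiguous bar pairs only."""
    hist: Counter = Counter()
    for prev, cur in zip(bars, bars[1:]):
        if cur.time - prev.time != bar_len:
            continue  # the pair straddles a time gap: excluded from this phase
        hist[round((cur.open - prev.close) / pip)] += 1
    return hist


t0 = datetime(2009, 6, 1, 0, 0)
bars = [
    Bar(t0, 100.00, 100.05, 99.98, 100.01),
    Bar(t0 + timedelta(minutes=1), 100.03, 100.04, 100.02, 100.03),
    Bar(t0 + timedelta(minutes=3), 101.00, 101.01, 100.99, 101.00),  # follows a time gap
    Bar(t0 + timedelta(minutes=4), 100.99, 101.00, 100.98, 100.99),
]
print(gap_histogram(bars))  # Counter({2: 1, -1: 1})
```

The 1-pip bin width is a choice, not a requirement. A coarser or finer binning changes how sharply the tails are resolved.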

Now suppose you assess the historical data for some prior year, say 2003, and your script observes a price gap between successive candles (with no time gap) that is well outside the statistically consistent range (for this example, let's say a 50-pip gap is detected). Your script then has reason to consider the price data under evaluation at that point in time suspicious.
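The post doesn't say exactly where "well outside" begins. One possible rule takes a very high empirical quantile of |gap| in the known-good data as the bound and flags anything beyond it in the suspect period. The 0.9999 quantile and both function names are assumptions for illustration:

```python
def gap_threshold(good_gaps_pips: list[float], quantile: float = 0.9999) -> float:
    """|gap| (in pips) below which the given fraction of the known-good gaps fall."""
    ordered = sorted(abs(g) for g in good_gaps_pips)
    idx = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[idx]


def flag_suspicious(suspect_gaps_pips: list[float], threshold: float) -> list[int]:
    """Indices of suspect gaps that fall outside the known-good envelope."""
    return [i for i, g in enumerate(suspect_gaps_pips) if abs(g) > threshold]


good = [-3, -2, -1, 0, 1, 2, 3] * 100          # toy stand-in for the known-good gaps
limit = gap_threshold(good)                    # 3
print(flag_suspicious([0, 50, -2, 4], limit))  # [1, 3]
```

Flagging only marks candles for review. What to do with them is the separate remediation question below.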

What you do with your suspicious candles (the remediation phase) is a whole other question, but here is an example:

There are dangers and pitfalls to avoid when remediating data. You can unknowingly cause more harm than good if you aren't careful in how you define your eviction list of bad candles as well as their replacements. (I consider simply deleting the candle from the hst record to be a replacement policy too: in that case you replace a bad price gap with an artificial time gap.)

Regarding those 'suspicious' candles: many brokers have weird price spikes for various reasons. In your example above, how do you know that it's obviously "bad tick" data? Spikes like that do happen...

Phillip, that's some interesting stuff, but it seems too risky and time-consuming to me. I prefer to use sources I can trust rather than try to 'fix' sources I can't...


Re: how do you know that it's obviously "bad tick" data... in this case, with this broker, a detailed analysis of 3+ years of data showed that such a spike never occurred, not even once, in over 1 million candles. Occam's razor... what is more likely to have occurred at 04:29 on August 2, 1999? That the market spiked upward 60 pips, the candle closed at its High, the market moved back down by 50 pips on the very next tick (the opening price of the very next candle), and the subsequent market activity showed no more volatility than the candles preceding the spike? Or that this particular candle contains a spurious bad tick that can legitimately (and with confidence) be eliminated?

As the first graph in my post above shows, across 3+ years of data the frequency of a close-to-open gap exceeding just 4 pips for this pair is zero. In this instance I felt comfortable branding the 50-pip gap an obvious outlier and evicting it from my copy of the historical trading record.

Re: trusted sources versus fixing... since you can't trust any historical record for the ask price when using the MT4 platform, the very notion of having trustable versus untrustable historical data is really one of personal comfort, in my opinion. It depends on your specific trade strategy, of course. In my case the exact specifics of the historical record are irrelevant to my EA. If a trade strategy needs precise and accurate historical records in order to be profitable, then it will likely not be profitable for short positions in any currency pair. All ask pricing in backtesting is fabricated by assuming a fixed spread, which is neither historically accurate nor representative of actual market conditions.

So if you are going to make an EA that is robust enough to deal with the reality of ask prices being contrived in backtesting, then you've probably created an EA that can also deal with spurious or erroneous price data in historical records. I could be wrong, and it wouldn't be the first time, but my approach to historical data is to use it for the purpose of making my EA agnostic to it.

Re: how do you know that it's obviously "bad tick" data... in this case, with this broker, a detailed analysis of 3+ years of data showed that such a spike never occurred, not even once, in over 1 million candles. Occam's razor... what is more likely to have occurred at 04:29 on August 2, 1999? That the market spiked upward 60 pips, the candle closed at its High, the market moved back down by 50 pips on the very next tick (the opening price of the very next candle), and the subsequent market activity showed no more volatility than the candles preceding the spike? Or that this particular candle contains a spurious bad tick that can legitimately (and with confidence) be eliminated?

Ok, but this is an extreme case. What if it was ~30 pips and you had 3 such cases in all those years of data? How do you know then? My point is that there will be cases where it's not obvious and you have to guess.

Re: trusted sources versus fixing... since you can't trust any historical record for the ask price when using the MT4 platform, the very notion of having trustable versus untrustable historical data is really one of personal comfort, in my opinion (...)

But that's not the point. I am talking about trusted sources that you can just use as is, without going through all this trouble. The lack of a real Ask price (fixed spread) in the MT4 Tester has nothing to do with this, since it affects bad sources, good sources and fixed sources alike... It's a completely separate issue.

Phillip: In my opinion that's the way to go. I think bad ticks can be identified quite safely by analysing price jumps in combination with the volatility that follows. If the market shows a spike and no abnormal volatility on the very next M1 bar, then it is very likely a bad tick. The screenshot you're showing above is such an example.
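Gordon's rule could be encoded roughly like this: a large jump between bar i's close and bar i+1's open counts as a bad tick only if bar i+1 itself is quiet. The thresholds in the example are placeholders. In practice `max_gap_pips` and `max_range_pips` would presumably come from the known-good profile. The names are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class Bar:
    open: float
    high: float
    low: float
    close: float


def likely_bad_ticks(bars: list[Bar], pip: float,
                     max_gap_pips: float, max_range_pips: float) -> list[int]:
    """Indices i where bar i ends in a jump that bar i+1 reverses without unusual volatility.

    A large close(i) -> open(i+1) gap followed by an ordinary bar i+1 range points to a
    bad tick in bar i; a large gap followed by a wide bar looks more like a real move.
    """
    suspects = []
    for i in range(len(bars) - 1):
        gap = abs(bars[i + 1].open - bars[i].close) / pip
        next_range = (bars[i + 1].high - bars[i + 1].low) / pip
        if gap > max_gap_pips and next_range <= max_range_pips:
            suspects.append(i)
    return suspects


calm_after = [Bar(100.00, 100.02, 99.99, 100.01),
              Bar(100.01, 100.61, 100.00, 100.61),   # closes on a 60-pip high
              Bar(100.11, 100.13, 100.10, 100.12)]   # back down 50 pips, quiet bar
print(likely_bad_ticks(calm_after, pip=0.01, max_gap_pips=4, max_range_pips=10))  # [1]
```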

You have already come across the more important issue: identifying bad ticks is one thing, correcting them is another. In my opinion there are 2 ways to do this.

1st: identify bad ticks and fix them by guessing. If it's the open that suddenly gaps +50 pips, then set it back to, say, +3 pips, and so on. --> Maybe not precise, but easy to implement.

2nd: identify bad ticks and compare the prices with another data stream. This would be more precise, but much more difficult to implement.
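Here is a sketch of the 1st (guessing) option. It clamps a gapped open back to within `max_gap` of the previous close. It also pulls the bar's high or low back if the spurious open had set that extreme. The high/low adjustment is an assumption beyond what the post specifies, and `clamp_open` is a hypothetical name. The 2nd option (cross-checking another feed) is not sketched here:

```python
def clamp_open(prev_close: float, bar: tuple[float, float, float, float],
               max_gap: float) -> tuple[float, float, float, float]:
    """Pull a gapped open back to within max_gap of the previous close (a deliberate guess).

    bar is (open, high, low, close); max_gap is in price units, e.g. 0.03 for 3 USDJPY pips.
    """
    o, h, l, c = bar
    new_open = min(max(o, prev_close - max_gap), prev_close + max_gap)
    if h == o:  # the spurious open also set the high
        h = max(new_open, c)
    if l == o:  # the spurious open also set the low
        l = min(new_open, c)
    # Keep the bar internally consistent: high >= open >= low.
    return new_open, max(h, new_open), min(l, new_open), c


print(clamp_open(100.00, (100.50, 100.50, 99.99, 100.01), max_gap=0.03))
```

An open that is already within range passes through unchanged. As Phillip warns above, any such replacement policy can do more harm than good if the eviction list is wrong.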

Gordon, I avoid guessing by relying on the statistics themselves to force the historical records to be self-consistent. I think I recall you mentioning in another post that you are a fundamentally statistics-driven trader. So you'll understand what I mean when I say the premise is to minimize the Kullback–Leibler divergence between the suspect dataset and the known good dataset (what you would label the "trustable source").
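One way to make "minimize the Kullback–Leibler divergence" concrete is to compare the gap histogram of the suspect period (P) with the known-good one (Q). Evicting a candle is then justified if it lowers D_KL(P || Q). The `eps` floor for bins the good data never saw and the evict-and-compare check are assumptions, not the poster's stated procedure:

```python
import math
from collections import Counter


def kl_divergence(p_counts: Counter, q_counts: Counter, eps: float = 1e-9) -> float:
    """D_KL(P || Q) between two histograms given as {bin: count}.

    P is the suspect data, Q the known-good reference. Bins that Q never saw get a tiny
    probability eps so the divergence stays finite but large.
    """
    p_total, q_total = sum(p_counts.values()), sum(q_counts.values())
    kl = 0.0
    for k in set(p_counts) | set(q_counts):
        p = p_counts.get(k, 0) / p_total
        if p > 0:
            q = q_counts.get(k, 0) / q_total
            kl += p * math.log(p / max(q, eps))
    return kl


good = Counter({-1: 10, 0: 20, 1: 10})
suspect = good + Counter({50: 1})      # the same profile plus one 50-pip gap
cleaned = suspect - Counter({50: 1})   # evict that candle
print(kl_divergence(suspect, good) > kl_divergence(cleaned, good))  # True
```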

Only, in the case of forex, we would use the generalized normal distribution instead of the Gaussian (normal) distribution, since the excess kurtosis of financial markets is typically larger than zero (fat tails). I doubt I am saying anything new to you, but I am just expanding on the premise of my methodology for identifying and evicting suspect data with confidence (the statistical kind, not the machismo kind ;)). That way you know my selection criterion is not something as simplistic (and flawed) as setting high/low bandpass filters and ramming the data through.
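For reference, the generalized normal density is f(x) = β / (2αΓ(1/β)) · exp(-(|x - μ|/α)^β). Its excess kurtosis depends only on the shape β: β = 2 gives the Gaussian (0), and β < 2 gives fatter tails. The sketch below computes that closed form alongside a simple moment estimate of a sample's excess kurtosis. Comparing the two is one possible way to pick β from gap or return data, offered as an assumption rather than the poster's procedure:

```python
import math


def gennorm_excess_kurtosis(beta: float) -> float:
    """Excess kurtosis of the generalized normal distribution with shape parameter beta."""
    return math.gamma(5 / beta) * math.gamma(1 / beta) / math.gamma(3 / beta) ** 2 - 3


def sample_excess_kurtosis(xs: list[float]) -> float:
    """Moment estimate m4 / m2**2 - 3, e.g. for a list of M1 returns or gaps."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / m2 ** 2 - 3


print(gennorm_excess_kurtosis(2.0))  # ~0 (Gaussian)
print(gennorm_excess_kurtosis(1.0))  # 3.0 (Laplace)
```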

Naturally, there are assumptions about the stationarity of the financial instrument's pricing data over the "trusted source" time period when handling it as a stochastic process. But provided I am willing to require my EA codes to confine their activity to periods of equivalent stationarity in future time series, self-consistency is inherent to the approach. Since the "trusted source" data has finite length, it limits how far into future time series we can expect the past cyclostationary processes captured in our characterization to hold.

But there is a higher-level point to be reinforced here that I think we can both agree on. Regardless of the "historical accuracy" of the data or the length of time it covers, the value of using historical data and backtesting to "optimize" a trade strategy depends entirely on the person behind the keyboard. That person must know which questions backtesting needs to answer. Quantity does not trump quality: neither the length of the historical data nor the hours spent ramming an optimization through iterative backtesting will answer those questions if they were not well defined in the first place. How long did it take you to realize that "what parameters will give me maximum profits or minimum drawdown?" was NOT the question to try to answer by backtesting :)

Schnappi, it depends on what you are trying to achieve. Do you want a dataset that more closely reflects historically accurate pricing nuances, or are you interested in the number of time series your trade triggers and money management are tested over? There is no wrong answer; everyone approaches market trading differently, of course. Personally, I don't use historical data for linear time-series testing. I know I am not alone in this, but I freely acknowledge that the majority of folks don't work this way. I use historical data to extract the raw statistical nature underlying a currency pair's characteristics (Lévy–Itō decomposition). I then use it to create statistically equivalent, but not identical, time series of historical data by way of Monte Carlo routines. Then I backtest with these fabricated hst time series. (This isn't light reading material, but I include the link in case you aren't already aware of it and want to read up on it.)
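The Lévy–Itō approach is well beyond a short example. As a much simpler stand-in, here is an i.i.d. bootstrap: it resamples historical bar-to-bar price changes with replacement to produce statistically similar but not identical paths. This is a swapped-in technique, not the poster's method. It keeps the marginal distribution of changes (fat tails included) but throws away autocorrelation and volatility clustering. The function name is hypothetical:

```python
import random


def bootstrap_paths(prices: list[float], n_paths: int, seed: int = 0) -> list[list[float]]:
    """Synthetic price paths built by resampling historical bar-to-bar changes with replacement.

    Preserves the distribution of individual changes, not their ordering or dependence.
    """
    diffs = [b - a for a, b in zip(prices, prices[1:])]
    rng = random.Random(seed)
    paths = []
    for _ in range(n_paths):
        path = [prices[0]]
        for d in rng.choices(diffs, k=len(diffs)):
            path.append(path[-1] + d)
        paths.append(path)
    return paths


paths = bootstrap_paths([100, 101, 99, 102], n_paths=1000, seed=1)
```

Each path would then be fed to the backtester in place of the real hst series. The result is a distribution of outcomes rather than a single equity curve.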

It's just like weather forecasting. You don't forecast tomorrow's weather by knowing today's and yesterday's weather to ever higher degrees of precision and accuracy. Instead, you create models that capture the inherent statistics of how weather metrics (temperature, humidity, pressure, etc.) evolve. Then you intentionally alter the initial conditions and let the Monte Carlo run forward in time. You do this a few thousand times and average the results (in spirit; the actual details are naturally more complicated), and that forms the basis for tomorrow's forecast: "lows in the mid 30s and highs in the upper 60s".

If you want to filter out bad ticks from older historical data simply so you can backtest 10 years of data instead of 3, then I will caution you. You may be falling into the "quantity will drive quality" fallacy that many backtesters fall for at some point in their careers. I was there once, and stuck there for a while too. I was convinced my losing codes would/could become winners if only I had longer historical data to optimize the backtest over. Maybe it turns out to be true for some, and the lengthier backtest really does the trick for their forward-testing profits, but for me it turned out to be fool's gold (and a lot of wasted time).

If you want lengthier historical records because you are employing autocorrelation functions and the like, then you are probably just as well off creating your own series of virtual/synthetic pricing data to hone your latches and phase locks. That way you can directly control the robustness of the latching protocols through how you fabricate the time series in the first place.
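Here is a toy illustration of "controlling the robustness by how you fabricate the time series": generate a series with a known, planted autocorrelation, then check that an autocorrelation estimate recovers it. The AR(1) model is an assumption chosen for simplicity. "Latches" and "phase locks" are the poster's terms, and nothing here implements them:

```python
import random


def ar1_series(phi: float, n: int, sigma: float = 1.0, seed: int = 0) -> list[float]:
    """x[t] = phi * x[t-1] + Gaussian noise: a synthetic series whose lag-1 autocorrelation is phi."""
    rng = random.Random(seed)
    xs = [0.0]
    for _ in range(n - 1):
        xs.append(phi * xs[-1] + rng.gauss(0.0, sigma))
    return xs


def autocorr(xs: list[float], lag: int) -> float:
    """Sample autocorrelation at the given lag."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((v - mean) ** 2 for v in xs)
    cov = sum((xs[i] - mean) * (xs[i + lag] - mean) for i in range(n - lag))
    return cov / var


series = ar1_series(phi=0.5, n=20_000, seed=42)
print(round(autocorr(series, 1), 2))  # close to the 0.5 that was planted
```

Changing `phi`, the noise level, or the series length shows how much signal a given autocorrelation-based component needs before it reacts reliably.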

I don't claim to have the right answers, just saying if we don't start with the right questions in the first place then we can't hope for the answers to be of any use to our overall goal (profits, presumably).

You're saying you extract the statistical characteristics of a market, then create synthesized market data and run Monte Carlo simulations on it. That's extremely interesting, but unfortunately it's beyond my skills. I've started to read the two links you posted, but I must say that I'm not able to understand them. I do have a university background, but not in mathematics or anything similar. My approach is a bit more, let's call it, "hands-on", at least from the point of view of a discretionary trader. Really sad. You guys probably don't offer internships, do you?

I'm aware of what you called the "quantity will drive quality" fallacy, since I have also lost a lot of time being stuck at this point. I wouldn't say that I wasted this time, though, but that's a different point. No, the reason I entered this discussion is that I'm currently enhancing my development process itself. I'm going to acquire additional data to increase my scope. We're also working on a solution to automate backtests with MT, and so on.

Schnappi, I just now saw your post. I can appreciate the sentiment and do agree that at first pass it all seems rather complex (to be sure, it is not simple). But don't ever let yourself feel like it is something you can't master on your own terms someday in the near future. You don't need a university degree to understand and use this stuff. You just need the time, dedication and personal drive to keep at it, and you will inevitably master the topics.

It helps to be made aware of some of the terms and topics used in the professional investment finance industry. That will go some distance toward shortening the learning curve, and I hope my posts have assisted you in that capacity. I wouldn't be where I am now if others before me hadn't offered their shoulders for me to stand on.

We "home grown" quant traders all have a lot in common. Our backgrounds are extremely diverse and not many of us have higher education in finance or programming, but what we lack in formal education we aim to make up for with tenacity and ambition. It worked for Thomas Edison... admittedly not the best example from an ethics/morals standpoint, but his 10,000 lightbulb prototypes are the moral of the story.

You will come to recognize, and perhaps you already have, that your personal rate of progress is iterative in nature. You learn more about coding techniques and your codes get more sophisticated. You learn more about trade strategies that have and haven't worked in the past, and you hone your own strategies a bit more. You learn about risk management versus risk measurement, and you start to grapple with your risk of ruin in ways you weren't even aware you needed to in the first place.

And just because my approach seems complex does not mean that the complexity is a necessity... far simpler approaches may be superior in every way; I'm just not clever enough to have figured out how yet. There are a lot of foolishly complex ways to make a lightbulb, and more complex methods do not guarantee a better lightbulb.

So don't be put off by any perceived difference between the simplicity of your approach and the complexity of mine... you may very well be on the right track while I am off in left field.

1005phillip: 2010.03.17 18:38

This (attached mq4 script) is what I use to get the job done. It is not pretty (the code, that is) and could do with some cleaning up, such as swapping out the repetitive ifs for switches, etc.

Note: W1 candle open times are automatically aligned to the first day of the trading week (Monday), and MN candle open times are automatically aligned to the first open market day of the new month.
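The attached mq4 script isn't reproduced here. Below is a Python sketch of the alignment rule the note describes. It assumes bar times are in broker server time and treats "first open market day" as the earliest calendar day in the month that has any bar. Both function names are hypothetical:

```python
from datetime import datetime, timedelta


def w1_open(t: datetime) -> datetime:
    """Open time of the W1 candle containing t: midnight on the Monday of that week."""
    day = t.replace(hour=0, minute=0, second=0, microsecond=0)
    return day - timedelta(days=day.weekday())  # weekday(): Monday == 0


def mn_opens(bar_times: list[datetime]) -> dict[tuple[int, int], datetime]:
    """Open time of each MN candle: midnight of the earliest day in that month that has a bar."""
    opens: dict[tuple[int, int], datetime] = {}
    for t in bar_times:
        key = (t.year, t.month)
        day = t.replace(hour=0, minute=0, second=0, microsecond=0)
        if key not in opens or day < opens[key]:
            opens[key] = day
    return opens


print(w1_open(datetime(2010, 3, 17, 18, 38)))  # 2010-03-15 00:00:00
```

Deriving the MN open from the data itself, rather than from the calendar, means a month that starts on a weekend gets its first trading day as the open.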